All's Well That Ends Well

We’ve been having some stability problems of late. In our rush to get some minimal version of the document ingestion pipeline up and running, we created a number of tools for gathering corpus statistics and configuring term tables and we built an interactive REPL console to help our readers better understand the system. These tools are mostly system integrations, and as such, are not covered by unit tests. In recent days we’ve found these integrations to be broken more often than working.

While it feels great to be pouring a lot of concrete, we’ve decided to pause in order to shore up our foundations. One focus is to develop tests for the integration code.

The challenge with writing these tests stems from file system operations.

The BitFunnel statistics command, for example, reads a number of configuration files

and BitFunnel chunks from disk, and then after a bit of computation

writes out a bunch of intermediate files like histograms and document frequency tables.

As we continue to bring over new modules and functionality, the number of

configuration, data, and intermediate files will only grow.

We could just write tests that rely on the filesystem, but as a general rule, I avoid writing tests that access the filesystem. My rationale relates to system configuration, developer data safety, and test brittleness. Let’s consider at each of these.

System Configuration In order for a test to access a file, the system must be configured correctly. This means that the test needs to know the path to the file and, in the case of a read operation, the file itself must exist and have the right permissions. In the case of a write operation, the path to the file must exist or be created and the test needs some policy for handling the case where it wants to overwrite an existing file (such as the partial file left over from the previous test run which crashed).

All of these problems are small. We could easily add a step to the README.md instructing the developer to setup an environment variable with the path to the test files. We could add a post-build step that creates the right directories and copies the required data files. We could use an OS specific temp directory generator. We could run tests in containers. All of these would work - the problem is that they add up over time to make the project hard to use.

Our goal is ease of use - the ideal onboarding experience is to clone the repo, install one or two tools (like the C++ compiler and CMake) and then kick off a build that works 100% of the time.

Data Safety

There’s another problem with tests that access the filesystem and I’ve been

bitten by this more than once. What happens is some well intentioned developer

writes piece of code that “cleans” the test directory in preparation for the

next test run. Then through a combination of string handling bugs or poorly

chosen file names, real files, having nothing to do with the test, end up getting

clobbered. Or maybe the test itself overwrites that great American novel draft

you’ve been working on for years. These situations always lead to tears and usually

the well intentioned developer suggests it was your fault for storing important

files in whatever directory seemed like an obvious choice for test output.

Or it was your fault for not setting $TEMP before the test did an rm -rf $TEMP/*.

Test Brittleness Let’s face it - working with files is hard. It is not because the code is complex - the problem is that files are outside of the process sandbox. Anyone can mess with a file. Maybe a virus checker quarantined your file. Maybe a previous test run was done with elevated permissions and now the current test run can’t overwrite the old files. Maybe you didn’t escape characters properly in the file name or you generated a temp path that was too long. Maybe a zombie process is holding a write handle. Maybe it works on the PC, but not the Mac.

There’s a million reasons why tests that interact with the filesystem become brittle. We want developers to run tests early and run tests often. If the tests are fast and 100% reliable, we will enter a virtuous cycle. If the tests are flakey or slow, developers will stop running them and relying on them and we end up in a vicious cycle.

The Bard Comes to My Rescue

Developing self-contained integration tests that don’t hit the filesystem will take some time. My first challenge is to find a replacement for the 17k Wikipedia pages we’re using for today’s tests. My criteria for the test corpus is

- Small enough to embed in a C++ source file.

- Large enough to support interesting scenarios.

- Permissive license compatible with the MIT License.

- Text makes some amount of sense to humans.

What I finally came up with was Shakespeare’s Sonnets. There are 154 of them, they fit into about 172Kb, they are plain text, and they are in the public domain.

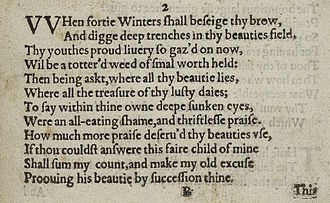

My next step was to convert the Bard’s immortal words into C++ code. Here’s his source code, from the 1609 quarto entitled “SHAKE-SPEARES SONNETS. Never before Imprinted.”

Fortunately Project Gutenberg did the heavy lifting, converting scans of the original text to ASCII while updating the spelling:

When forty winters shall besiege thy brow,

And dig deep trenches in thy beauty’s field,

Thy youth’s proud livery so gazed on now,

Will be a tatter’d weed of small worth held:

Then being asked, where all thy beauty lies,

Where all the treasure of thy lusty days;

To say, within thine own deep sunken eyes,

Were an all-eating shame, and thriftless praise.

How much more praise deserv’d thy beauty’s use,

If thou couldst answer ‘This fair child of mine

Shall sum my count, and make my old excuse,’

Proving his beauty by succession thine!

This were to be new made when thou art old,

And see thy blood warm when thou feel’st it cold.

At this point my job was to tokenize the text and then write it out as C++ string literals in BitFunnel chunk format. I could have used the Workbench Tool or Lucene, but these seemed like giant hammers for a really small nail. In the end I wrote a little Node.JS app to do the work.

The process was mostly straight forward. I did need to use some care with the single quotes. Sometimes a single quote was used in a contraction like “feel’st” or “tatter’d” or a possessive like “beauty’s” or “youth’s”. In these cases, I wanted to keep the quote are part of the token.

Other times the single quote was used to demarcate a phrase, as in

‘This fair child of mine

Shall sum my count, and make my old excuse,’

These quotes should never be part of a token. My strategy was to

first replace all of the interesting quotes with a sentinel character.

I used the '#' character since it didn’t appear elsewhere in the corpus.

Once these quotes were safely marked, I removed all of the remaining quotes

and other punctuation. Then I replaced each '#' with a single quote.

Here’s the code the I used to clean and tokenize each line.

function ProcessLine(input) {

// Convert input to lower case.

var a = input.toLowerCase();

// Use hash to temporarily mark single quotes used in contractions.

var b = a.replace(/(\w)'(\w)/g, "$1#$2");

// Remove all punctuation, including remaining single quotes.

var c = b.replace(/[,.!?:;']/g, "");

// Convert contraction markers back to single quotes.

var d = c.replace(/#/g, "'");

// Replace spaces with word-end markers.

var e = d.replace(/[ ]/g, "\\0") + "\\0";

return e;

}

Outputting the C++ code was mostly straightforward.

The only hitch involved concatenating octal escape codes with Arabic numerals

in the C string literals. Take a look at the sample output below. The third

line, "01\0Sonnet\0" "2\0\0" had to be broken into two adjacent string

literals in order to keep the "\0" after "Sonnet" from concatenating with

the "2" to form the octal literal "\02". Fortunately this situation only

appeared in the titles so it was easy to special case the treatment.

char const * sonnet2 =

"0000000000000002\0"

"02\0https://en.wikipedia.org/wiki/Sonnet_2\0\0"

"01\0Sonnet\0" "2\0\0"

"00\0"

"when\0forty\0winters\0shall\0besiege\0thy\0brow\0"

"and\0dig\0deep\0trenches\0in\0thy\0beauty's\0field\0"

"thy\0youth's\0proud\0livery\0so\0gazed\0on\0now\0"

"will\0be\0a\0tatter'd\0weed\0of\0small\0worth\0held\0\0"

"then\0being\0asked\0where\0all\0thy\0beauty\0lies\0"

"where\0all\0the\0treasure\0of\0thy\0lusty\0days\0\0"

"to\0say\0within\0thine\0own\0deep\0sunken\0eyes\0"

"were\0an\0all-eating\0shame\0and\0thriftless\0praise\0"

"how\0much\0more\0praise\0deserv'd\0thy\0beauty's\0use\0"

"if\0thou\0couldst\0answer\0this\0fair\0child\0of\0mine\0"

"shall\0sum\0my\0count\0and\0make\0my\0old\0excuse\0"

"proving\0his\0beauty\0by\0succession\0thine\0"

"this\0were\0to\0be\0new\0made\0when\0thou\0art\0old\0"

"and\0see\0thy\0blood\0warm\0when\0thou\0feel'st\0it\0cold\0"

"\0\0";

Putting it all together.

Translating a small corpus into C++ string literals was a good first step. Making the end-to-end integration test required that I also virtualize all of the filesystem interactions, but this is a tale for another post.

One nice outcome of this work is that the build now generates an example that automatically configures and runs an index with no requirement to download corpus files.

It’s called TheBard and what it does is run the corpus statistics gathering

stage on the sonnets, then builds a TermTable, and then boots up a interactive

BitFunnel REPL console.

There are only a few command-line arguments and they happen to be optional.

% TheBard -help

TheBard

A small end-to-end index configuration and ingestion example based on

154 Shakespeare sonnets.

Usage:

./TheBard [-help]

[-verbose]

[-gramsize <integer>]

[-help]

Display help for this program. (boolean, defaults to false)

[-verbose]

Print information gathered during statistics and termtable stages.

(boolean, defaults to false)

[-gramsize <integer>]

Set the maximum ngram size for phrases. (integer, defaults to 1)

Here’s a sample session. In this case I didn’t supply the -gramsize parameter

so we’ll be working with an index of unigrams.

% TheBard

Initializing RAM filesystem.

Gathering corpus statistics.

Building the TermTable.

Index is now configured.

Welcome to BitFunnel!

Starting 1 thread

(plus one extra thread for the Recycler.)

directory = "config"

gram size = 1

Starting index ...

Index started successfully.

Type "help" to get started.

0: help

Available commands:

cache Ingests documents into the index and also stores them in a cache

for query verification purposes.

delay Prints a message after certain number of seconds

help Displays a list of available commands.

load Ingests documents into the index

query Process a single query or list of queries. (TODO)

quit waits for all current tasks to complete then exits.

script Runs commands from a file.(TODO)

show Shows information about various data structures. (TODO)

status Prints system status.

verify Verifies the results of a single query against the document cache.

Type "help <command>" for more information on a particular command.

1: cache manifest sonnets

Ingestion complete.

2: show rows blood

Term("blood")

d 000000000000000000000000000000000000000000000000000000

o 000000000111111111122222222223333333333444444444455555

c 123456789012345678901234567890123456789012345678901234

RowId(3, 1111): 011000000010000001100000000000000000000000001000000000

RowId(0, 1278): 010000000010000000100000000000000000000000000000000001

3: verify one blood

Processing query " blood"

DocId(121)

DocId(109)

DocId(82)

DocId(67)

DocId(63)

DocId(19)

DocId(11)

DocId(2)

8 match(es) out of 154 documents.

4: show rows shame

Term("shame")

d 000000000000000000000000000000000000000000000000000000

o 000000000111111111122222222223333333333444444444455555

c 123456789012345678901234567890123456789012345678901234

RowId(3, 1058): 110000011100000000000000000000100111000000000000000000

RowId(0, 1225): 110000011100010000000000000000000101000000000000000000

5: verify one shame

Processing query " shame"

DocId(129)

DocId(127)

DocId(99)

DocId(95)

DocId(72)

DocId(36)

DocId(34)

DocId(10)

DocId(9)

DocId(2)

10 match(es) out of 154 documents.

6: show rows tatter'd

Term("tatter'd")

d 000000000000000000000000000000000000000000000000000000

o 000000000111111111122222222223333333333444444444455555

c 123456789012345678901234567890123456789012345678901234

RowId(3, 3349): 010000000000000000000000010000000000000000000010000000

RowId(3, 3350): 010000000000000000000000010000000000000000000010000000

RowId(3, 3351): 010000000000000000000000010000000000000000000010000000

RowId(0, 1440): 010010010000000000010000010011011000000000000000000000

7: verify one tatter'd

Processing query " tatter'd"

DocId(26)

DocId(2)

2 match(es) out of 154 documents.

8: show rows love

Term("love")

d 000000000000000000000000000000000000000000000000000000

o 000000000111111111122222222223333333333444444444455555

c 123456789012345678901234567890123456789012345678901234

RowId(0, 1019): 000000001100101000111110110010111111101101001110101000

At prompt 1, I enter cache manifest sonnets which loads all 154 sonnets

into the index. I could have used cache chunk sonnet0 to load only the

first chunk of 11 sonnets. Note that I used the cache command, instead

of the load command. The difference is that the cache command also

saves the IDocuments in a separate data structure that can be used to verify

queries processed by the BitFunnel engine.

In prompts 2 and 3, I examine the rows associated with the word, “blood”

and then run a query verification to see which documents should

match. The show rows command lists each of the RowIds associated with a term,

followed by the bits for the first 64 documents. The document ids are printed

vertically above each column of bits. In this example, we can see that

documents 2, 11, and 19 are likely to contain the word “blood” because

their columns contain only 1s. The verify one command confirms these

columns are, in fact, matches, and not false positives.

Prompts 4 and 5 repeat the experiment with the word, “shame”. This time we

see what appear to be matches in columns 1, 2, 8, 9, 10, 34, and 36.

The verify one command shows that columns 1 and 8 are not actually

matches, but instead correspond to false

positives.

Prompts 6 and 7 show, “tatter’d”, a word that is considerable more rare than “blood” and “shame”. Because “tatter’d” is rare, it requires four rows to drive the noise down to acceptable levels.

Constrast this with prompt 8 which looks at the word, “love”. Love appears in so many documents that it must reside in its own, private row.